The human brain is a fascinating lump of electrified meat.

We haven't even come close to understanding ourselves yet. You know the old adage that we "only use 10% of our brain"? Eh, not quite. A large share of the brain is made up of cells called glia, which act as the insulation and structural scaffolding of the brain... so imagine claiming we could just get rid of all that. That's like stripping all the plastic from the wires and expecting the device not to short circuit. Not how it works.

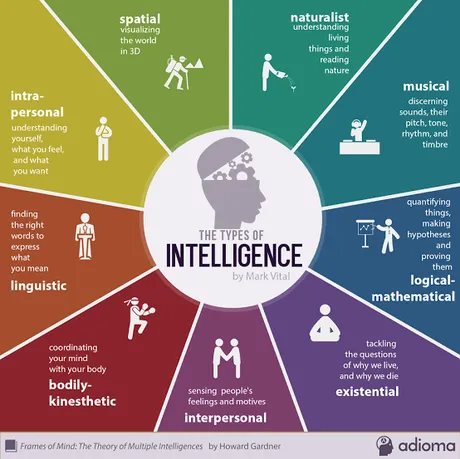

All brains work a little differently. Genetically, we seem to roll the dice and get a certain type that could be completely different from our parents'. Some people are more analytical. Some are quite good at memorization. Some are great at being intuitive and predicting the truth with only a little information at hand. Some have high emotional intelligence. Others have strong brain-to-body connections and amazing coordination. All of these skills can be learned and honed, and everyone has some natural raw ability to improve them. It is this diversity that makes the world go round and allows society to excel and specialize.

However it's not all sunshine and rainbows.

There's always a way to get tripped up because the world is so complex. For example, the human eye can't actually see a 3-dimensional object: each eye only receives a flat image, and the brain uses context clues like light, shadow, and size to guess at what is being seen. Famously, the anthropologist Colin Turnbull took Kenge, a Mbuti man from the dense Congo rainforest who had never seen things from far away, out onto an open plain. Kenge saw a herd of buffalo off in the distance and refused to believe they could possibly be buffalo because they were so small; he thought they were insects. As they drove toward the herd and the animals grew larger, his mind was blown: how had they been so tiny before?

Humans have excellent pattern recognition. It's a very necessary survival skill. The drawback to this is that we start seeing patterns that aren't there (with AI we call this "hallucination"). Some things are just completely random, and the human brain doesn't like random, so we often make things up to fill the vacuum and fail to see the bigger picture.

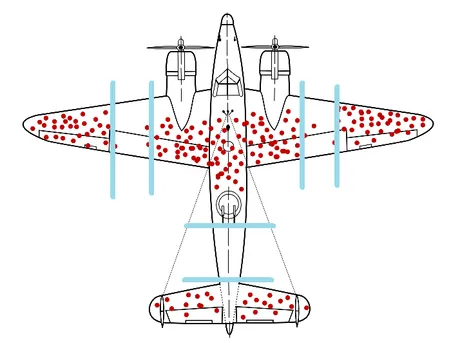

Survivorship Bias

This is one I covered just recently. We have a tendency to focus on the winners, heroes, and survivors of the story rather than the entire dataset, and this tends to produce wildly false conclusions. Within the context of crypto, a good example is meme-coins. You see someone up a million dollars on a meme-coin and think buying meme-coins is the way to go. You didn't focus on the thousands of other lottery losers who got rugged. This creates an incomplete dataset that makes it look like most people are huge winners, which is completely false.
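To make the "incomplete dataset" point concrete, here is a minimal sketch that compares the average return of the posts you actually see with the average return of everyone who bought. The population (5 winners who turn $100 into $10,000, 995 losers left with $5) and the rule that only profitable traders post are made-up assumptions for illustration, not real meme-coin data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Trade:
    stake: float   # dollars put into the coin
    payout: float  # dollars taken back out


def roi(trade: Trade) -> float:
    """Return on investment as a fraction: 1.0 means the money doubled, -1.0 means wiped out."""
    return (trade.payout - trade.stake) / trade.stake


def build_population(n_winners: int, n_losers: int) -> list[Trade]:
    # Hypothetical outcomes: winners turn $100 into $10,000 (100x);
    # losers get rugged and walk away with $5 of their $100.
    winners = [Trade(stake=100, payout=10_000) for _ in range(n_winners)]
    losers = [Trade(stake=100, payout=5) for _ in range(n_losers)]
    return winners + losers


def visible_posts(trades: list[Trade]) -> list[Trade]:
    # Assumption: only people who made money post about it.
    return [t for t in trades if roi(t) > 0]


everyone = build_population(n_winners=5, n_losers=995)
survivors = visible_posts(everyone)

print(f"Average ROI of the posts you see:   {mean(roi(t) for t in survivors):.0%}")  # 9900%
print(f"Average ROI of everyone who bought: {mean(roi(t) for t in everyone):.0%}")   # -45%
```

Same market, same coins: the filtered sample averages a 9,900% gain while the full dataset loses about 45% on average. The only thing that changed is which trades made it into view.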

Unit Bias

This is a big one I've referenced at least a dozen times over the years. Our brains give us a hit of dopamine as a reward when we complete a task, and this causes unit bias. We will overeat just to finish the meal. We will want to buy 1 Bitcoin, and if we can't afford it, it's "too expensive" so we buy none. We will buy a million units of some shitcoin priced under a penny and think it's a good deal without regard to the market cap, the total coins in circulation, or even the use-case or roadmap. Our metric for "a lot of money" has been $1M for decades, even though $1M was worth quite a bit more decades ago. These milestones give us that dopamine hit, so we assign a greater value to the milestone even when it's logically irrelevant to do so.
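The market-cap point is just multiplication: market cap = unit price × circulating supply, and what a budget really buys is a share of that supply, not a number of units. A minimal sketch, assuming two hypothetical coins (PENNY and PRICEY, with invented prices and supplies):

```python
from dataclasses import dataclass


@dataclass
class Coin:
    name: str
    price_usd: float
    circulating_supply: float

    @property
    def market_cap(self) -> float:
        # Market cap = unit price x number of units in circulation.
        return self.price_usd * self.circulating_supply

    def market_cap_at(self, target_price: float) -> float:
        """Market cap the coin would need to reach `target_price` at today's supply."""
        return target_price * self.circulating_supply


def share_of_supply(coin: Coin, budget_usd: float) -> float:
    """Fraction of the circulating supply that `budget_usd` buys."""
    units = budget_usd / coin.price_usd
    return units / coin.circulating_supply


# Two hypothetical coins; the names and numbers are made up for illustration.
penny = Coin("PENNY", price_usd=0.0005, circulating_supply=500e12)
pricey = Coin("PRICEY", price_usd=40.0, circulating_supply=10e6)

for coin in (penny, pricey):
    print(
        f"{coin.name}: ${coin.market_cap:,.0f} market cap, "
        f"$500 buys {share_of_supply(coin, 500):.2e} of the supply"
    )

print(f"PENNY needs a ${penny.market_cap_at(1.0):,.0f} market cap to reach $1")
```

With these invented numbers, $500 gets you a million PENNY tokens but only 12.5 PRICEY tokens, yet the PRICEY stake is 625 times larger as a share of the network, and PENNY would need a $500 trillion market cap just to touch $1.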

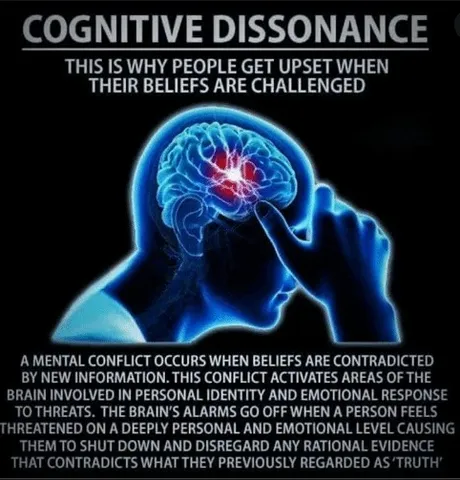

Cognitive Dissonance

This is an extremely common and dangerous one. I post this meme often. When new information conflicts with what we already believe, it creates mental discomfort, and we tend to resolve it by ignoring or denying the new information, no matter how obvious or true that data happens to be. Our brains build a fortress of information about how the world works. If someone tries to kick out a foundational pillar of that fortress, it will often be interpreted as a personal and threatening attack on the self (ego).

The ability to change one's mind is a skill, but it is not a skill that every person should have in infinite supply. There are real benefits to some people getting set in their ways and not being wishy-washy about new information. We call this ossification. These data filters are critically important for all of us. Sometimes they are wrong, and that's just the cost of doing business.

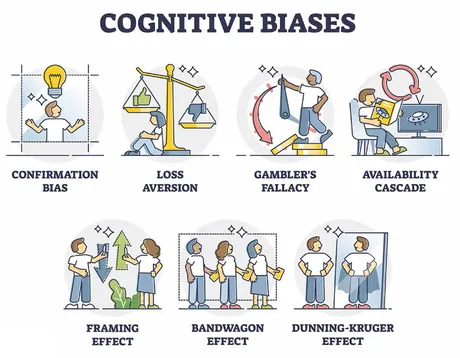

Confirmation Bias

The cousin of cognitive dissonance, confirmation bias is basically the flip side of the same idea. Rather than filtering out information that conflicts with our viewpoint, we let information that aligns with our perspective straight through without filtering it at all. Sometimes it doesn't matter how flimsy or wrong that piece of information is; we have a tendency to take it seriously, add it to the mind-fortress, and build on top of it even if it clearly doesn't belong there. Confirmation bias is everywhere, and in a group of people who all repeat the same viewpoints it turns into an echo chamber.

Loss Aversion

Losing what we have is much more mentally damaging than gaining the same asset is rewarding. For proof, look no further than the stories of ruined traders jumping out of windows after historic stock market crashes. There are plenty of people who can and do live happily under the blanket of poverty, but once a person reaches a certain "standard of living," it's hard for them to mentally downgrade. This is even reflected in the court system when it comes to child support and divorce: the amount that needs to be paid is not a flat fee and is tied to the previous standard of living.

I've been the victim of loss aversion many, many times as a professional poker player. Losing $1000 hurts way more than making $1000 feels good. If I was up $1000, simply the fear that I might lose it often kept me from playing more hours and making more money on average. I didn't care; I just didn't want to lose what I had in that moment. Being in the red $1000 was obviously even worse, and the crippling realization that I'd need to grind my way out of the hole often led to tilted play that made the problem even worse.
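The "losing $1000 hurts more than winning $1000" asymmetry is what Kahneman and Tversky formalized in prospect theory. The sketch below uses their value-function shape with the parameter estimates from Tversky and Kahneman's 1992 paper (α = β = 0.88, λ = 2.25). Treat those numbers as an assumed population average, not a measurement of any one poker player; `prospect_value` and `feels_worth_it` are hypothetical helper names.

```python
def prospect_value(x: float, alpha: float = 0.88, beta: float = 0.88, lam: float = 2.25) -> float:
    """Subjective value of a gain or loss of `x` dollars relative to where you are now.

    Gains are curved by `alpha`; losses are curved by `beta` and then
    scaled up by the loss-aversion coefficient `lam` (assumed 2.25).
    """
    if x >= 0:
        return x ** alpha
    return -lam * (-x) ** beta


def feels_worth_it(gain: float, loss: float, p_win: float) -> bool:
    """Does a bet that wins `gain` with probability `p_win` (else loses `loss`) *feel* positive?"""
    felt = p_win * prospect_value(gain) + (1 - p_win) * prospect_value(-loss)
    return felt > 0


win, lose = prospect_value(1000), prospect_value(-1000)
print(f"Losing $1000 hurts {abs(lose) / win:.2f}x as much as winning $1000 feels good")
print("Does a fair coin flip for $1000 feel worth taking?", feels_worth_it(1000, 1000, 0.5))
```

Under these assumed parameters a perfectly fair $1000 coin flip still "feels" like a losing proposition, which is the same pull that made booking a $1000 win feel safer than playing more hours.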

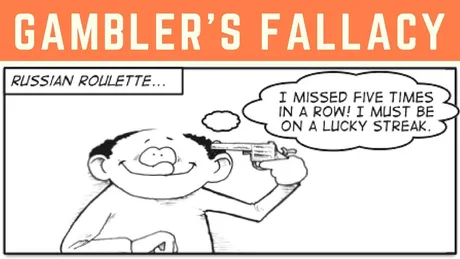

Gambler's Fallacy

The gambler's fallacy, also known as the Monte Carlo fallacy, occurs when an individual erroneously believes that a certain random event is less likely or more likely to happen based on the outcome of a previous event or series of events.

This is also one I have discussed a dozen times in previous blogs; I just didn't know it had a name. The gambler's fallacy is like walking into a casino and thinking that a slot machine that hasn't hit in a while is "due" to win. Ironically, gamblers often apply it to the exact opposite scenario too: a machine that has hit many times is "hot" and will hit again. Previous outcomes of independent random events do not affect future ones. This is one of the biggest false patterns out there, and many people whole-heartedly believe it to be true.
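A quick simulation shows why neither "due" nor "hot" holds up for independent events. A fair coin stands in for the slot machine here (an assumption for illustration); the sketch flips it half a million times and measures how often heads comes up right after a streak of 5 tails and right after a streak of 5 heads.

```python
import random


def heads_rate_after_streak(flips: list[bool], streak_len: int, streak_of: bool) -> float:
    """Fraction of heads among flips that immediately follow `streak_len` identical results.

    `flips` uses True for heads and False for tails. `streak_of=False` tests the
    "tails has gone on too long, heads is due" belief; `streak_of=True` tests "heads is hot".
    """
    hits = total = 0
    run = 0  # how many results equal to `streak_of` came right before this flip
    for flip in flips:
        if run >= streak_len:
            total += 1
            hits += flip  # True counts as 1, False as 0
        run = run + 1 if flip == streak_of else 0
    if total == 0:
        raise ValueError("streak never occurred; use more flips")
    return hits / total


rng = random.Random(42)  # fixed seed so the run is repeatable
flips = [rng.random() < 0.5 for _ in range(500_000)]

print(f"P(heads) overall:       {sum(flips) / len(flips):.3f}")
print(f"P(heads) after 5 tails: {heads_rate_after_streak(flips, 5, False):.3f}")
print(f"P(heads) after 5 heads: {heads_rate_after_streak(flips, 5, True):.3f}")
```

All three rates land within sampling noise of 0.500. The coin has no memory, so a streak in either direction tells you nothing about the next flip.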

It applies to many situations other than gambling.

Take, for example, Pavlov's experiment. All he did was ring a bell when he fed his test dog. He did this many times, and then one day he rang the bell but did not feed the dog. The dog salivated and drooled only because it heard the bell; there were no other context clues. So, as we can see, the same kind of false pattern behind the gambler's fallacy can also be the basis of pattern conditioning, using a completely arbitrary and unrelated stimulus, such as a sound, to represent physical food and the idea of eating within the brain. This is an incredibly powerful bias when applied in such out-of-the-box contexts.

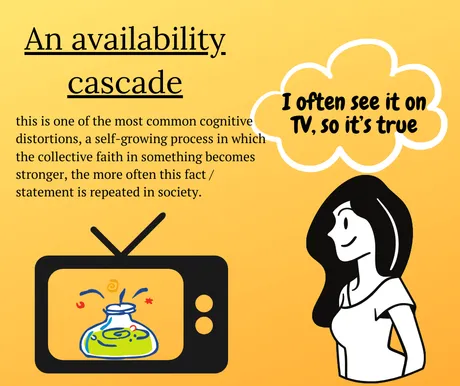

Availability Cascade

Similar to an echo chamber, people are more likely to believe information if they hear it many times from different sources. This is the main bias that makes blatant propaganda so effective. Someone hears something on the news, then they hear it from a friend, then from their boss, and then from grandma, and so on. Now they believe the claim has a very high chance of being true, even though it all came from a single hidden source in the background.
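The trap is counting mentions instead of independent origins. A minimal sketch, assuming a hypothetical "who told whom" chain (the names and the `hidden_source` node are invented for illustration), that follows each mention back to where it started:

```python
def origin(source: str, heard_from: dict[str, str]) -> str:
    """Follow the 'who told you?' chain back to where a claim started."""
    visited = set()
    while source in heard_from:
        if source in visited:
            raise ValueError(f"circular sourcing detected at {source!r}")
        visited.add(source)
        source = heard_from[source]
    return source


# Hypothetical propagation chain: who each person or outlet got the claim from.
heard_from = {
    "news": "hidden_source",
    "friend": "news",
    "boss": "news",
    "grandma": "friend",
}

# Everyone you personally heard the claim from.
mentions = ["news", "friend", "boss", "grandma"]
independent = {origin(m, heard_from) for m in mentions}

print(f"Times you heard it:  {len(mentions)}")
print(f"Independent origins: {len(independent)} -> {independent}")
```

Four separate mentions feel like four confirmations, but tracing them back collapses everything into a single origin.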

The Framing Effect

Here's another one that I've noticed dozens of times in my life and didn't realize it actually had a name. The framing effect is a classic tool of subtle data manipulation. Is the glass half empty, or half full? Both are true but one helps us frame the argument in the way that we want to frame it. This is sometimes a very hard one to catch because we can't claim that the speaker is wrong, only that they've manipulated the data to fit their agenda.

I suppose a good example of this was COVID in 2020, when the media was purposefully trying to create mass hysteria over a virus that the vast majority of infected people survived. Piles of dead bodies everywhere. Never using percentages, and instead framing the issue as hundreds of thousands or millions dead. Four years later, the world population isn't smaller; it's actually higher. If all we had was the framed data, we might have guessed that half the population died, as if it were an actual deadly plague. This framing was purposeful and deliberate, and it was so effective that it was parroted by millions of terrified citizens, which fed an availability cascade and an echo chamber. It could just as easily have gone the other way if that's how the powers that be decided to play it.
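Both moves described in this section, half full versus half empty and raw counts versus percentages, can be generated from the same two numbers. A minimal sketch with made-up numbers (not real statistics from any event); `frames` is a hypothetical helper name:

```python
def frames(count: int, total: int, noun: str) -> dict[str, str]:
    """Describe the same `count` out of `total` in several equally true ways."""
    if not 0 < count <= total:
        raise ValueError("count must be between 1 and total")
    share = count / total
    return {
        "absolute": f"{count:,} {noun} affected",
        "percent": f"{share:.1%} of {noun} affected",
        "complement": f"{1 - share:.1%} of {noun} unaffected",
        "ratio": f"1 in {round(total / count):,} {noun} affected",
    }


# Hypothetical numbers chosen only to show the effect.
for label, text in frames(count=250_000, total=50_000_000, noun="people").items():
    print(f"{label:>10}: {text}")
```

Every line is true, and none of them is "wrong"; the speaker simply picks the one that lands the way they want, which is exactly why framing is so hard to call out.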

Bandwagon Effect

Here's one that's easy to guess from context. If your friend jumped off a bridge, would you? The Bandwagon Effect turns an argument into a popularity contest. Whoever has the most votes must surely be the winner... because that's how it works, right? I have bad news: the truth is not a democracy.

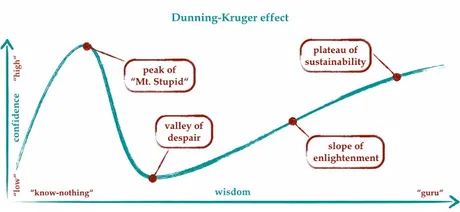

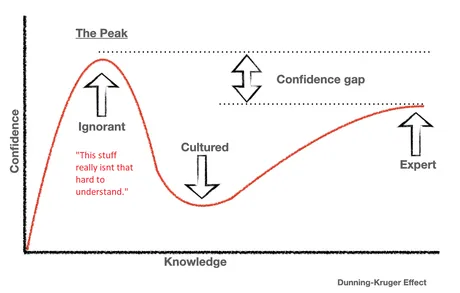

Dunning-Kruger Effect

Arguably the most annoying one of all, the Dunning-Kruger Effect is where all the know-it-alls live. After a little research and a bit more understanding than the general public, we tend to think we are experts long, long before actually being one. It is only by surrounding ourselves with actual experts and making fools of ourselves a couple of times that we begin to realize our grasp of the topic is middling at best.

So begins the long journey down the rabbit hole toward a more nuanced, balanced, and complex perspective. Even the masters at the top of their class will not be as confident as those sitting on Ignorance Peak. There's always more to learn and an infinite number of ways to get it wrong. Masters got where they are today by being wrong many times over, more than anyone else.

Those at the "peak of Mt. Stupid" tend to greatly oversimplify the complexity of the underlying topics (using a Frame that suits their argument) in order to make reductive points that completely fall apart in the face of actual insight and scrutiny. The problem is that most of the population can't defend themselves from this kind of nonsense, because they know even less than the know-it-all speaker who's done 5 hours of research.

Conclusion

These biases exist for a reason. It's easy to make the claim that we should "do better" and that they "shouldn't exist," but they absolutely need to exist, as they are a direct result of how we process the world. To remove the bias and pattern recognition is like throwing the baby out with the bathwater. This is not a viable option in any capacity.

What we can do is be aware of these fallacies and try to check ourselves and others when they poke their heads up. Being unbiased and worldly with multiple perspectives is quite possibly one of the hardest things to do on a psychological level. Those who attempt it and fail can end up in a place that's even worse than the biases themselves. That doesn't mean we shouldn't try, only that we should be careful. We not only have to expect failure, but embrace it. This is the core principle of all learning and mastery.

Return from Cognitive Bias Fallacy to edicted's Web3 Blog